You'll find several effective point cloud creation methods for UAV mapping. LiDAR scanning offers high precision, while Structure from Motion (SfM) and photogrammetry provide cost-effective alternatives. Stereo vision recovers depth from paired images, and Time-of-Flight cameras capture depth in real time. RGB-D cameras combine color with depth sensing for detailed results. Structured light scanning excels in close-range applications, and interferometry techniques cover large areas. Laser pulse detection forms the core of many UAV-based systems. Each method has its strengths, and the right choice depends on your project's needs. Exploring these options further will show you how to get the most out of your UAV mapping capabilities.

Key Takeaways

- LiDAR scanning provides high-precision 3D point clouds with exceptional vertical accuracy, and because pulses can pass through gaps in the canopy, it's ideal for capturing ground elevation beneath vegetation.

- Photogrammetry and Structure from Motion (SfM) offer a cost-effective method using overlapping UAV imagery to generate dense point clouds.

- Stereo vision creates accurate point clouds using two cameras, relying on depth perception and precise camera calibration.

- Time-of-Flight (ToF) cameras enable real-time depth capture during flight and work in both indoor and outdoor environments, although strong sunlight and reflective surfaces can degrade their measurements.

- Multi-View Stereo (MVS) enhances photogrammetry by analyzing multiple overlapping images for complex surface reconstruction, producing millions of points per scene.

LiDAR Scanning

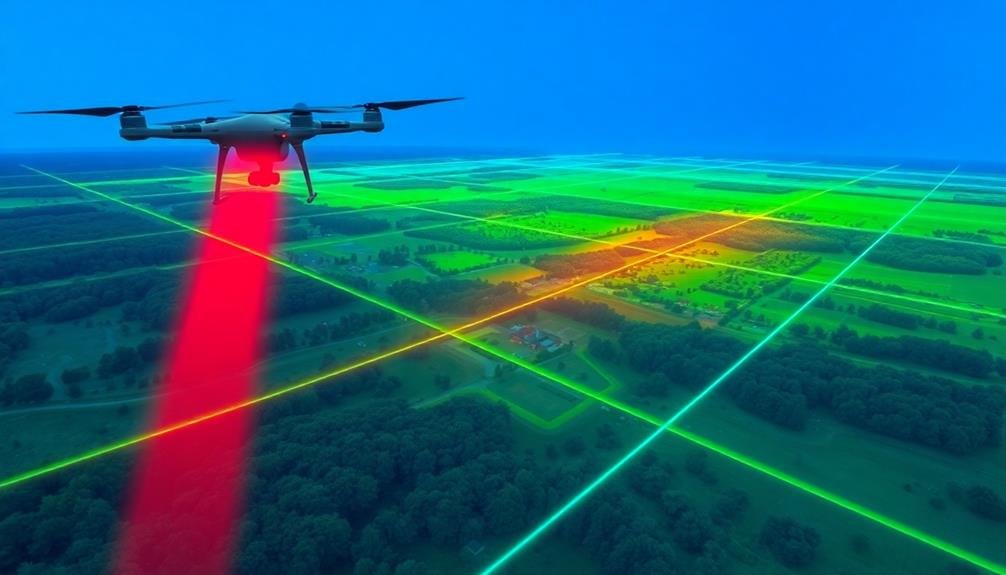

LiDAR scanning technology has revolutionized UAV mapping by enabling high-precision 3D point cloud creation. When you use LiDAR on a drone, you're employing a remote sensing method that uses laser light to measure distances. The LiDAR sensor emits rapid laser pulses, which bounce off surfaces and return to the sensor. By measuring the time it takes for the light to return, the system calculates the distance to each point.
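The timing step comes down to one formula: the pulse covers the distance twice, so the range is half the speed of light multiplied by the round-trip time. Here's a minimal Python sketch of that calculation; the function name is illustrative, and it ignores the small slowdown of light in air that real systems correct for.

```python
# Speed of light in a vacuum, in metres per second.
SPEED_OF_LIGHT = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Return the one-way distance (m) for a pulse's round-trip time (s).

    The pulse travels to the target and back, so the distance is c * t / 2.
    Atmospheric effects on the speed of light are ignored in this sketch.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_s / 2.0


# A return that arrives 400 ns after emission is roughly 60 m away.
print(round(range_from_round_trip(400e-9), 2))  # prints 59.96
```

The same formula shows why timing precision matters: an error of just 1 ns in the measured time shifts the computed range by about 15 cm.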

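As your drone flies, it captures millions of these measurements. Combining each range with the sensor's position and orientation at that instant, recorded by the drone's GNSS receiver and inertial measurement unit (IMU), turns the measurements into a dense point cloud that accurately represents the terrain and objects below.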
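A range on its own is not yet a 3D point. The sketch below shows, in simplified form, how one return can be placed on the ground from the sensor's position, heading, and scan angle. It assumes level flight (no roll or pitch), a scanner that sweeps perpendicular to the flight line, and a local east/north/height frame; production software also applies roll, pitch, lever-arm, and boresight corrections from the GNSS/IMU solution. The function name and parameters are hypothetical.

```python
import math


def georeference_return(sensor_e, sensor_n, sensor_h,
                        heading_deg, scan_angle_deg, range_m):
    """Place one LiDAR return in a local east/north/height frame.

    Simplifying assumptions (hypothetical model, not a vendor algorithm):
    - level flight: roll and pitch are zero
    - the scanner sweeps perpendicular to the flight direction
    - scan_angle_deg is measured from nadir, positive to the right of track
    - heading_deg is measured clockwise from north
    """
    psi = math.radians(heading_deg)
    theta = math.radians(scan_angle_deg)
    cross_track = range_m * math.sin(theta)  # horizontal offset from the track
    drop = range_m * math.cos(theta)         # vertical distance below the sensor
    # Unit vector pointing right of track, as (east, north): (cos psi, -sin psi)
    east = sensor_e + cross_track * math.cos(psi)
    north = sensor_n - cross_track * math.sin(psi)
    height = sensor_h - drop
    return east, north, height


# Flying north at 120 m, a 100 m return at 30 degrees right of nadir.
e, n, h = georeference_return(0.0, 0.0, 120.0, 0.0, 30.0, 100.0)
print(round(e, 2), round(n, 2), round(h, 2))  # prints 50.0 0.0 33.4
```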

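LiDAR's advantage lies in its ability to reach the ground through vegetation: some pulses pass through gaps in the canopy, and sensors that record multiple returns per pulse capture both the treetops and the ground beneath. This makes LiDAR ideal for mapping forests or areas with dense foliage. You'll find that LiDAR provides exceptional vertical accuracy, often within a few centimeters.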

To use LiDAR effectively, you'll need to plan your flight paths carefully, ensuring proper overlap between passes. You'll also need to take into account factors like flying height, speed, and sensor specifications to optimize data collection.
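For planning, flying height and the sensor's field of view set the width of each swath on the ground, and the overlap you want between passes then sets the spacing between flight lines. Here's a minimal sketch, assuming flat terrain and a scanner whose full field of view is given in degrees; the example values (80 m altitude, 70° field of view, 30% sidelap) are hypothetical.

```python
import math


def swath_width(altitude_m: float, fov_deg: float) -> float:
    """Ground width (m) covered by one pass over flat terrain."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def flight_line_spacing(altitude_m: float, fov_deg: float, sidelap: float) -> float:
    """Distance (m) between adjacent flight lines for a sidelap fraction in [0, 1)."""
    if not 0.0 <= sidelap < 1.0:
        raise ValueError("sidelap must be in [0, 1)")
    return swath_width(altitude_m, fov_deg) * (1.0 - sidelap)


print(round(swath_width(80, 70), 1))               # prints 112.0
print(round(flight_line_spacing(80, 70, 0.3), 1))  # prints 78.4
```

Flying lower narrows the swath, so you'll need more flight lines, and more battery, to cover the same area.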

Post-processing software helps you clean and classify the point cloud, transforming raw data into usable 3D models for various applications like topographic mapping, urban planning, or forestry management.
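Cleaning usually starts with removing stray points such as birds, dust, or other spurious returns. One common approach is a statistical outlier filter: points whose average distance to their nearest neighbors is far above the cloud-wide average get dropped. The sketch below is a brute-force version for small clouds; the parameter names `k` and `std_ratio` are illustrative, and real tools use spatial indexes to scale to millions of points.

```python
import math
from statistics import mean, pstdev


def remove_statistical_outliers(points, k=8, std_ratio=2.0):
    """Return the points whose mean k-nearest-neighbour distance is not unusually large.

    points: list of (x, y, z) tuples.
    A point is kept if its mean distance to its k nearest neighbours is at most
    mean + std_ratio * standard deviation, taken over all points.
    Brute force, O(n^2): fine for a sketch, too slow for real clouds.
    """
    if len(points) <= k:
        return list(points)
    mean_dists = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        mean_dists.append(mean(dists[:k]))
    threshold = mean(mean_dists) + std_ratio * pstdev(mean_dists)
    return [p for p, d in zip(points, mean_dists) if d <= threshold]


# A flat 5 x 5 grid of ground points plus one stray return 50 m above it.
grid = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
cleaned = remove_statistical_outliers(grid + [(2.0, 2.0, 50.0)])
print(len(cleaned))  # prints 25
```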

Structure from Motion (SfM)

While LiDAR scanning offers high precision, Structure from Motion (SfM) provides a cost-effective alternative for creating point clouds from UAV imagery. This photogrammetric technique uses overlapping images captured by a drone to reconstruct 3D scenes.

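You'll find that SfM algorithms identify and match common features across multiple photos, then use those matches to recover the camera positions and a sparse set of 3D points. A densification step, typically Multi-View Stereo (MVS), turns this sparse reconstruction into a dense point cloud.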
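Under the hood, once SfM has estimated where each camera was and which way it was pointing, every matched feature defines a viewing ray from each camera, and the 3D point lies where those rays (nearly) meet. The sketch below uses the midpoint method: it finds the closest points on two rays and returns the point halfway between them. It assumes camera centers and ray directions are already known; real SfM pipelines derive them from estimated poses and intrinsics, often use other triangulation methods, and refine everything with bundle adjustment.

```python
def _dot(a, b):
    """Dot product of two 3-vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))


def _add_scaled(p, d, s):
    """Return p + s * d for 3-vectors given as tuples."""
    return tuple(pi + s * di for pi, di in zip(p, d))


def triangulate_midpoint(c1, d1, c2, d2, eps=1e-12):
    """Midpoint of the shortest segment between rays c1 + s*d1 and c2 + t*d2."""
    w0 = tuple(a - b for a, b in zip(c1, c2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < eps:
        raise ValueError("rays are (nearly) parallel; depth is undetermined")
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = _add_scaled(c1, d1, s)  # closest point on the first ray
    p2 = _add_scaled(c2, d2, t)  # closest point on the second ray
    return tuple((u + v) / 2.0 for u, v in zip(p1, p2))


# Two cameras 10 m apart both see a feature located at (5, 0, 20).
print(triangulate_midpoint((0, 0, 0), (5, 0, 20), (10, 0, 0), (-5, 0, 20)))
# prints (5.0, 0.0, 20.0)
```

With noisy matches the two rays don't intersect exactly, which is why the method takes the midpoint of their closest approach rather than an exact intersection.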

To create a point cloud using SfM, you'll need to:

- Capture high-quality, overlapping images with your UAV

- Process the images using specialized SfM software

- Refine and enhance the resulting point cloud

SfM's main advantage is its accessibility, as it requires only a camera-equipped drone and software.

However, you should be aware that SfM point clouds may lack the same level of accuracy as LiDAR in areas with dense vegetation or uniform textures.

You'll also need to take into account factors like image resolution, flight altitude, and overlap percentage to get good results.

Despite these challenges, SfM remains a popular choice for many mapping applications due to its lower cost and ability to produce both point clouds and orthomosaic imagery from the same dataset.

Stereo Vision

Stereo vision, a key technique in UAV mapping, relies on depth perception to create accurate point clouds.

You'll need to calibrate the cameras to ensure precise measurements and correct for lens distortions.

To generate the point cloud, you'll employ point matching algorithms that identify corresponding features in overlapping images from different viewpoints.

Depth Perception Techniques

In UAV mapping, depth perception relies heavily on stereo vision techniques. You'll find that these methods simulate human binocular vision by using two cameras mounted on the UAV. As the drone flies, the cameras capture overlapping images from slightly different angles, allowing depth information to be calculated.

To understand stereo vision's role in depth perception, consider these key aspects:

- Disparity calculation: The system measures the difference in position of corresponding points between the left and right images.

- Triangulation: Using the known distance between cameras and the disparity, it computes the depth of each point.

- Epipolar geometry: This constrains the search for matching points to a single line, reducing computational complexity.
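For a rectified image pair, where epipolar lines run along image rows, triangulation reduces to a single formula: depth Z = f · B / d, with focal length f in pixels, baseline B in meters, and disparity d in pixels. Differentiating it also gives a rough error estimate. Here's a minimal sketch; the example numbers (1200 px focal length, 0.3 m baseline, 12 px disparity) are hypothetical.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth (m) of a point seen in a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means infinitely far)")
    return focal_px * baseline_m / disparity_px


def depth_uncertainty(depth_m: float, focal_px: float, baseline_m: float,
                      disparity_error_px: float) -> float:
    """Approximate depth error from a disparity error: dZ ~ Z^2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_error_px


z = depth_from_disparity(12.0, focal_px=1200.0, baseline_m=0.3)
print(round(z, 2))                                       # prints 30.0
print(round(depth_uncertainty(z, 1200.0, 0.3, 0.5), 2))  # prints 1.25
```

Because the error grows with the square of depth, a wider baseline or a lower flying height helps when you need accurate elevations from stereo.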

You'll notice that stereo vision excels in creating detailed 3D models of landscapes and structures. It's particularly effective in areas with good texture and contrast.

However, you should be aware of its limitations on homogeneous or reflective surfaces, which offer few distinctive features to match. To overcome these challenges, you can combine stereo vision with other techniques like structured light or time-of-flight sensors.

This multi-modal approach enhances the accuracy and robustness of your UAV mapping system, ensuring thorough depth perception across various environments.

Camera Calibration Process

Camera calibration forms the foundation of accurate stereo vision in UAV mapping. It's an essential step that lets you convert your cameras' images into precise geometric measurements for creating high-quality point clouds.

You'll need to determine your camera's intrinsic parameters, including focal length, principal point, and lens distortion coefficients.

To calibrate your cameras, you'll use a calibration pattern, typically a checkerboard or grid of known dimensions. You'll capture multiple images of this pattern from various angles and distances.

Specialized software then analyzes these images to calculate the camera parameters.
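To see what those intrinsic parameters do, here's a sketch of how a calibrated camera maps a 3D point (in the camera's own coordinate frame) to a pixel: a pinhole projection with focal lengths fx, fy and principal point cx, cy, plus two radial distortion coefficients k1, k2. This follows the radial part of the widely used Brown-Conrady distortion model; calibration tools such as OpenCV also estimate additional radial and tangential terms that this sketch leaves out. The intrinsic values in the example are hypothetical.

```python
def project_point(point_cam, fx, fy, cx, cy, k1=0.0, k2=0.0):
    """Project a 3D point in camera coordinates (X right, Y down, Z forward) to pixels.

    Uses a pinhole model with radial distortion factor 1 + k1*r^2 + k2*r^4,
    where r is the distance from the optical axis on the normalized image plane.
    """
    x_c, y_c, z_c = point_cam
    if z_c <= 0:
        raise ValueError("point must be in front of the camera")
    x, y = x_c / z_c, y_c / z_c  # normalized image coordinates
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 * r2
    u = fx * x * radial + cx
    v = fy * y * radial + cy
    return u, v


# A point 1 m right of the optical axis at 10 m depth, without and with distortion.
print(project_point((1.0, 0.0, 10.0), 1000.0, 1000.0, 640.0, 480.0))
print(project_point((1.0, 0.0, 10.0), 1000.0, 1000.0, 640.0, 480.0, k1=-0.1))
```

The first call lands 100 px to the right of the principal point; the negative k1 (barrel distortion) pulls the second projection slightly toward the image center. Calibration estimates these parameters so the distortion can be undone before triangulation.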

For stereo vision setups, you'll also need to determine extrinsic parameters, which define the relative position and orientation of the two cameras. This process involves capturing simultaneous images from both cameras and finding corresponding points.

Once calibrated, your cameras can accurately triangulate 3D positions of points in the scene. You'll need to recalibrate periodically, especially after any physical changes to the camera setup.

Point Matching Algorithms

Point matching algorithms are the backbone of stereo vision. These algorithms are essential for creating accurate 3D point clouds from UAV imagery. They work by identifying corresponding points in overlapping images, allowing for the calculation of depth and position in 3D space.

You'll find that effective point matching is vital for producing high-quality maps and models from your UAV data.

Several popular point matching algorithms are used in UAV mapping:

- Scale-Invariant Feature Transform (SIFT): This algorithm detects and describes local features in images, making it robust to changes in scale, rotation, and illumination.

- Speeded Up Robust Features (SURF): A faster alternative to SIFT, SURF uses integral images and box filters to detect and describe features.

- Oriented FAST and Rotated BRIEF (ORB): This algorithm combines modified FAST keypoint detection with BRIEF descriptors, offering a computationally efficient solution for real-time applications.
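Whichever detector you pick, the matching step usually comes down to a nearest-neighbor search plus a check that the best match is distinctive. ORB produces binary descriptors compared by Hamming distance (SIFT and SURF produce float vectors compared by Euclidean distance), and Lowe's ratio test, introduced with SIFT, is a common distinctiveness check. The sketch below stores binary descriptors as Python ints; real ORB descriptors are 256 bits, the 8-bit values in the example are purely illustrative, and the 0.75 ratio is a common choice rather than a fixed rule.

```python
def hamming(a: int, b: int) -> int:
    """Count the differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_descriptors(desc_a, desc_b, ratio=0.75):
    """Match descriptors from image A to image B with a nearest-neighbour ratio test.

    Returns (index_in_a, index_in_b, distance) tuples. A match is kept only when
    the best distance is clearly smaller than the second best, which rejects
    ambiguous matches on repetitive texture.
    """
    if len(desc_b) < 2:
        return []  # the ratio test needs at least two candidates
    matches = []
    for i, da in enumerate(desc_a):
        ranked = sorted((hamming(da, db), j) for j, db in enumerate(desc_b))
        (best, j_best), (second, _) = ranked[0], ranked[1]
        if best < ratio * second:
            matches.append((i, j_best, best))
    return matches


# Toy 8-bit descriptors; the second one in image A is equally close to everything.
image_a = [0b11110001, 0b11111111]
image_b = [0b11110000, 0b00001111, 0b00111100]
print(match_descriptors(image_a, image_b))  # prints [(0, 0, 1)]
```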

When choosing a point matching algorithm for your UAV mapping project, consider factors such as processing speed, accuracy, and the specific requirements of your application.

You'll want to balance computational efficiency with the level of detail needed for your final point cloud.

Time-of-Flight (ToF) Cameras

Time-of-Flight (ToF) cameras measure distance with light and have emerged as a powerful tool for UAV mapping. These cameras illuminate the scene and measure how long the light takes to bounce back from objects, either directly by timing short pulses or indirectly from the phase shift of continuously modulated light. From this round-trip time, a ToF camera determines the distance to each pixel in the scene, creating a detailed 3D point cloud.
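Many ToF cameras measure the round trip indirectly: they modulate their light at a known frequency and measure the phase shift of the returning signal. Distance follows from that phase, and the modulation frequency also sets a maximum unambiguous range beyond which readings wrap around. Here's a minimal sketch; the 20 MHz modulation frequency is a hypothetical example value.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def distance_from_phase(phase_rad: float, mod_freq_hz: float) -> float:
    """Distance (m) for a continuous-wave ToF pixel: d = c * phase / (4 * pi * f).

    The phase is wrapped into [0, 2*pi), so targets beyond the unambiguous
    range alias back to shorter distances.
    """
    return SPEED_OF_LIGHT * (phase_rad % (2 * math.pi)) / (4 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz: float) -> float:
    """Largest distance (m) that can be measured before the phase wraps: c / (2 * f)."""
    return SPEED_OF_LIGHT / (2 * mod_freq_hz)


print(round(unambiguous_range(20e6), 2))                 # prints 7.49
print(round(distance_from_phase(math.pi / 2, 20e6), 3))  # prints 1.874
```

That short unambiguous range is one reason ToF cameras suit low-altitude and close-range work; lowering the modulation frequency extends the range at the cost of depth precision.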

You'll find ToF cameras particularly useful for UAV mapping due to their compact size and low power consumption. They capture depth information in real time, allowing for rapid data acquisition during flight. Because they provide their own illumination, ToF cameras work in dim light and can operate in both indoor and outdoor environments.

When using ToF cameras for UAV mapping, you'll benefit from their high frame rates and ability to capture moving objects. This makes them ideal for dynamic scenes or when your UAV is in motion.

However, you should be aware of their limitations, such as potential interference from strong sunlight or reflective surfaces. To maximize accuracy, you'll need to take into account factors like flight altitude, camera resolution, and environmental conditions when planning your mapping missions.

Photogrammetry

Transforming 2D images into 3D models, photogrammetry is a cornerstone technique in UAV mapping. You'll find it's widely used due to its cost-effectiveness and ability to capture high-resolution data. By taking multiple overlapping photos from different angles, you're able to reconstruct detailed 3D representations of landscapes, buildings, and objects.

To create a point cloud using photogrammetry, you'll typically follow these steps:

- Data acquisition: Fly your UAV in a predetermined pattern, capturing images with significant overlap (usually 60-80% front overlap and 30-60% side overlap).

- Image alignment: Use specialized software to identify common points across multiple images and align them in 3D space.

- Dense cloud generation: The software then creates a dense point cloud by calculating the 3D coordinates of millions of points based on the aligned images.
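Before flying, you can turn those overlap targets into concrete numbers. Ground sample distance (GSD), the ground size of one pixel, follows from sensor width, focal length, image width, and altitude; the image footprint and your overlap percentages then give the distance between exposures and between flight lines. This sketch assumes a nadir-pointing camera over flat ground with the image's short side along track; the camera values (13.2 mm sensor width, 8.8 mm lens, 5472 × 3648 pixels) and the 100 m altitude are hypothetical example numbers.

```python
def ground_sample_distance(sensor_width_mm, focal_length_mm, image_width_px, altitude_m):
    """Ground size of one pixel in metres for a nadir camera over flat ground."""
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)


def photo_spacing(gsd_m, image_height_px, front_overlap):
    """Distance (m) between exposures along the flight line (short image side along track)."""
    return gsd_m * image_height_px * (1.0 - front_overlap)


def line_spacing(gsd_m, image_width_px, side_overlap):
    """Distance (m) between adjacent flight lines (long image side across track)."""
    return gsd_m * image_width_px * (1.0 - side_overlap)


gsd = ground_sample_distance(13.2, 8.8, 5472, 100.0)
print(f"GSD: {gsd * 100:.2f} cm/px")                             # prints GSD: 2.74 cm/px
print(f"Photo spacing: {photo_spacing(gsd, 3648, 0.75):.1f} m")  # prints Photo spacing: 25.0 m
print(f"Line spacing: {line_spacing(gsd, 5472, 0.6):.1f} m")     # prints Line spacing: 60.0 m
```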

You'll find that photogrammetry excels in capturing texture and color information, making it ideal for creating visually rich 3D models.

However, keep in mind that this method may struggle with reflective or uniform surfaces. You'll need to account for lighting conditions and ensure proper image overlap for the best results.

Multi-View Stereo (MVS)

While photogrammetry forms the foundation of many UAV mapping projects, Multi-View Stereo (MVS) takes the process a step further. MVS algorithms analyze multiple overlapping images to reconstruct highly detailed 3D models. Unlike the sparse reconstruction that SfM produces on its own, MVS can capture complex surfaces and textures in detail.

You'll find that MVS excels in creating dense point clouds, often producing millions of points per scene. This level of detail is essential for accurate representations of intricate structures or landscapes. MVS algorithms use pixel-level matching across multiple images, allowing for the reconstruction of fine details that might be missed by other methods.
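One common way MVS methods score pixel-level matches is photo-consistency: for each candidate depth, a small patch around a pixel in one image is compared with the patch it would project to in another image. Normalized cross-correlation (NCC) is a popular score because it tolerates brightness and contrast differences between images. Here's a minimal sketch on flattened grey-value patches; the depth search that would call it is not shown.

```python
import math


def ncc(patch_a, patch_b):
    """Normalized cross-correlation of two equal-size, flattened grey-value patches.

    Returns a score in [-1, 1]; values near 1 mean the patches look alike even
    if one is uniformly brighter or has higher contrast than the other.
    """
    if len(patch_a) != len(patch_b) or not patch_a:
        raise ValueError("patches must be non-empty and the same size")
    mean_a = sum(patch_a) / len(patch_a)
    mean_b = sum(patch_b) / len(patch_b)
    da = [x - mean_a for x in patch_a]
    db = [y - mean_b for y in patch_b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0:
        return 0.0  # textureless patch: nothing to correlate
    return sum(x * y for x, y in zip(da, db)) / denom


print(ncc([10, 20, 30, 40], [110, 120, 130, 140]))  # same pattern, brighter: prints 1.0
print(ncc([5, 5, 5, 5], [1, 2, 3, 4]))              # uniform patch: prints 0.0
```

A perfectly uniform patch scores 0.0 because it has no texture to correlate, which is why uniform surfaces tend to leave gaps in dense point clouds.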

When you're working with MVS, you'll need to ensure sufficient image overlap and consistent lighting conditions. The quality of your results will depend heavily on the number and variety of viewpoints captured during your UAV flight. MVS is particularly useful for mapping urban environments, archaeological sites, and complex natural landscapes.

One downside you might encounter is the increased computational requirements of MVS compared to simpler photogrammetric techniques. However, the enhanced detail and accuracy often justify the additional processing time and resources needed.

Structured Light Scanning

Structured light scanning projects a known pattern onto a surface and analyzes its deformation to calculate 3D coordinates.

You'll find this method offers high precision and speed for close-range applications, particularly in controlled environments.

However, it's limited by ambient light interference and isn't well-suited for large-scale outdoor UAV mapping projects.

Working Principle Explained

Structured light scanning typically works by projecting a known pattern of light onto an object or scene. This pattern, often consisting of stripes or grids, is then captured by a camera. Because the pattern deforms when projected onto the object's surface, the system can calculate depth and surface information from these distortions.
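A common way to make the pattern "known" pixel by pixel is to project a sequence of black-and-white stripe images whose stripes follow a Gray code. Each camera pixel records a bright/dark sequence across the images, and decoding it gives the index of the projector stripe that lit the pixel; with that correspondence, depth is triangulated much as in stereo vision. Gray codes are used because neighboring stripes differ by a single bit, so a pixel on a stripe boundary is off by at most one stripe. Here's a minimal decoding sketch; it assumes the camera images have already been thresholded into bright/dark bits.

```python
def gray_to_binary(gray: int) -> int:
    """Convert a reflected binary Gray code to an ordinary binary integer."""
    binary = gray
    shift = gray >> 1
    while shift:
        binary ^= shift
        shift >>= 1
    return binary


def decode_pixel(bits):
    """Turn one pixel's bright/dark observations into a projector stripe index.

    bits: truthy for bright, falsy for dark, most significant pattern first.
    """
    gray = 0
    for bit in bits:
        gray = (gray << 1) | (1 if bit else 0)
    return gray_to_binary(gray)


# Bright, bright, dark across three patterns: Gray code 110, i.e. stripe 4 of 8.
print(decode_pixel([True, True, False]))  # prints 4
```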

You'll find that structured light scanning offers several advantages for UAV mapping:

- High accuracy: It can achieve sub-millimeter precision in ideal conditions

- Speed: Capable of capturing thousands of points per second

- Versatility: Works well on various surfaces, including those with complex geometries

When you're using structured light scanning for UAV mapping, you'll need to take into account factors like ambient light, object reflectivity, and distance from the target.

The scanner projects the light pattern, while the UAV's onboard camera captures the reflected light. Specialized software then processes this data, triangulating the position of each point to create a detailed 3D point cloud.

You'll notice that this method excels in capturing fine details and textures, making it particularly useful for high-resolution 3D models of close-range targets such as structural details, building elements, or archaeological finds.

Advantages and Limitations

The advantages and limitations of structured light scanning for UAV mapping are crucial to understand when evaluating this method.

You'll find that structured light scanning offers high accuracy and resolution, allowing you to capture fine details of surfaces and objects. It's particularly effective for close-range mapping and can produce dense point clouds quickly. It works best under controlled lighting, however: strong ambient light, especially sunlight, washes out the projected pattern, so indoor use is far easier than outdoor use.

However, structured light scanning has limitations you should evaluate.

It's less effective for large-scale mapping due to its limited range, typically a few meters. You'll need to fly your UAV closer to the target, which may not be feasible in all situations. The method can struggle with highly reflective or transparent surfaces, potentially leading to data gaps or inaccuracies.

Additionally, the equipment for structured light scanning can be more expensive than other point cloud creation methods. Keep in mind, too, that this technique may require more processing power and storage capacity due to the high-density data it produces.

Despite these limitations, structured light scanning remains a valuable tool for specific UAV mapping applications where precision is paramount.

RGB-D Cameras

RGB-D camera technology combines color imaging with depth sensing, offering a powerful tool for UAV mapping applications. These cameras capture both color information and depth data for each pixel, allowing you to create detailed 3D point clouds with accurate spatial relationships.
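Turning an RGB-D frame into a point cloud is a per-pixel back-projection: with the depth camera's intrinsics (focal lengths fx, fy and principal point cx, cy), each pixel (u, v) with depth Z becomes the 3D point X = (u - cx) · Z / fx, Y = (v - cy) · Z / fy, carrying that pixel's color. The sketch assumes the depth image is already aligned to the color image (many RGB-D camera SDKs can do this) and ignores lens distortion; the function name and the tiny 2 × 2 example frame are hypothetical.

```python
def depth_to_points(depth, rgb, fx, fy, cx, cy):
    """Back-project a depth image into a coloured point cloud in the camera frame.

    depth: rows of depth values in metres (0 = no measurement)
    rgb:   rows of (r, g, b) tuples, pixel-aligned with depth
    Returns (x, y, z, colour) tuples.
    """
    points = []
    for v, (depth_row, rgb_row) in enumerate(zip(depth, rgb)):
        for u, (z, colour) in enumerate(zip(depth_row, rgb_row)):
            if z <= 0:
                continue  # the sensor returned no depth for this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z, colour))
    return points


depth = [[1.0, 0.0], [2.0, 2.0]]
rgb = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (255, 255, 255)]]
cloud = depth_to_points(depth, rgb, fx=100.0, fy=100.0, cx=0.5, cy=0.5)
print(len(cloud))  # prints 3 (the zero-depth pixel is skipped)
```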

You'll find that RGB-D cameras are particularly useful for indoor mapping and close-range outdoor environments where precise depth information is essential.

When using RGB-D cameras for UAV mapping, you should consider the following advantages:

- Real-time 3D mapping: RGB-D cameras enable on-the-fly point cloud generation, making them ideal for rapid surveying and inspection tasks.

- High-resolution color data: The integrated RGB sensor provides rich color information, enhancing the visual quality of your point clouds.

- Improved object recognition: The combination of color and depth data facilitates more accurate object detection and classification in your maps.

However, RGB-D cameras do have limitations. Their range is typically limited to a few meters, making them less suitable for large-scale outdoor mapping.

Additionally, they're sensitive to ambient light conditions and may struggle in bright sunlight or low-light environments. Despite these drawbacks, RGB-D cameras remain a valuable option for specific UAV mapping scenarios, particularly when detailed close-range data is required.

Interferometry

While RGB-D cameras excel at close-range mapping, interferometry offers a different approach for creating high-precision point clouds over larger areas. This technique uses the interference of electromagnetic waves to measure distances and create detailed 3D models of terrain and structures.

For UAV and other airborne mapping, the main form used is synthetic aperture radar interferometry (InSAR). It works by comparing two or more SAR images of the same area taken from slightly different positions. The phase differences between these images allow you to calculate precise elevation data.

Here's how SAR interferometry compares with LiDAR and optical interferometry:

| Technique | Typical range | Accuracy | Best applications |
|---|---|---|---|
| SAR interferometry | km | cm-scale | Terrain mapping |
| LiDAR | ~100 m | cm-scale | Urban environments |
| Optical interferometry | ~100 m | μm-scale | Structural details |

You'll find interferometry particularly useful for mapping large areas with high precision, even in challenging weather conditions. It's especially effective for monitoring subtle changes in terrain over time, making it valuable for applications like disaster assessment, glacier monitoring, and infrastructure inspection.
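For change monitoring, the key relation links the phase difference between two acquisitions to movement along the radar's line of sight: because the signal travels out and back, one full 2π cycle of phase corresponds to half a wavelength of displacement. Here's a minimal sketch, assuming the phase has already been unwrapped and cleaned of topographic, trajectory, and atmospheric contributions; sign conventions differ between processing tools, and the 5.6 cm (C-band) wavelength is only an example value.

```python
import math


def los_displacement(delta_phase_rad: float, wavelength_m: float) -> float:
    """Line-of-sight displacement (m) between two acquisitions from their phase difference.

    The round trip doubles the path change, so displacement = wavelength * phase / (4 * pi).
    Here a positive phase change maps to a positive displacement; real processors
    may use the opposite sign.
    """
    return wavelength_m * delta_phase_rad / (4 * math.pi)


# One full fringe (2*pi) at a 5.6 cm wavelength corresponds to 2.8 cm of movement.
print(round(los_displacement(2 * math.pi, 0.056), 4))  # prints 0.028
```

Since a single fringe represents only a few centimeters, fractions of a fringe resolve millimeter-level ground motion, which is what makes the technique useful for subtle change detection.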

Laser Pulse Detection

Beyond interferometry, laser pulse detection stands as a cornerstone technique in UAV-based point cloud creation. This method involves emitting short laser pulses and measuring the time it takes for them to return after hitting objects. You'll find that laser pulse detection offers high accuracy and, because pulses can reach the ground through gaps in vegetation, it's well suited to mapping complex terrain.

When using laser pulse detection for UAV mapping, you'll need to take into account several key factors:

- Pulse rate: Higher rates allow for denser point clouds but require more processing power.

- Scan angle: Wider angles cover more area but may reduce point density.

- Flight altitude: Lower altitudes increase point density but limit coverage area.

You'll achieve the best results by optimizing these parameters based on your specific mapping requirements.
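Those three trade-offs can be captured in a rough estimate: on flat ground, a single pass spreads the pulses over a strip whose width depends on altitude and scan angle, so density is approximately pulse rate ÷ (ground speed × swath width). This sketch ignores multiple returns, overlapping passes, and uneven point spacing across the swath; the 240 kHz pulse rate and other example values are hypothetical.

```python
import math


def point_density(pulse_rate_hz, speed_m_s, altitude_m, scan_angle_deg):
    """Approximate single-pass point density (points per square metre) on flat ground.

    scan_angle_deg is the sensor's full scan angle (field of view).
    """
    swath_m = 2.0 * altitude_m * math.tan(math.radians(scan_angle_deg) / 2.0)
    ground_area_per_s = speed_m_s * swath_m  # square metres swept each second
    return pulse_rate_hz / ground_area_per_s


print(round(point_density(240_000, 10.0, 80.0, 70.0)))  # prints 214
print(round(point_density(240_000, 10.0, 40.0, 70.0)))  # half the altitude: prints 428
```

Halving the altitude doubles the density but also halves the swath, which is exactly the density-versus-coverage trade-off in the list above.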

Laser pulse detection systems on UAVs typically use LiDAR sensors, which can capture millions of points per second. This high-resolution data enables you to create detailed 3D models of landscapes, buildings, and infrastructure.

While laser pulse detection excels in accuracy, it's crucial to recognize that it can be more expensive than other point cloud creation methods. However, for many applications, the precision and versatility it offers make it a worthwhile investment.

Frequently Asked Questions

How Do Weather Conditions Affect Point Cloud Accuracy in UAV Mapping?

Weather greatly impacts your point cloud accuracy. Wind can cause UAV instability, affecting image overlap. Rain and fog reduce visibility and data quality. Extreme temperatures may affect sensor performance. Sunlight changes can alter shadows and reflections.

What Are the Legal Restrictions for Using UAVs in Point Cloud Creation?

You'll need to comply with local UAV regulations, which often include registration, pilot certification, and flight restrictions. In many jurisdictions, you can't fly over people, near airports, or in restricted airspace without special permission. Always check current laws before operating.

How Does Battery Life Impact the Choice of Point Cloud Creation Method?

Battery life directly affects your choice of point cloud creation method. You'll need to balance flight time with data collection density. Shorter flights may require more efficient methods, while longer battery life allows for more thorough data gathering.

Can Point Clouds From Different Methods Be Combined for Better Results?

Yes, you can combine point clouds from different methods for improved results. You'll often get better coverage and detail by merging data from various techniques. This process, called data fusion, requires first aligning (co-registering) the clouds in a common coordinate system, and it can enhance the overall quality of your 3D model.
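Once the clouds share a coordinate system, one simple way to fuse them is to pool all the points and thin the overlap with a voxel grid, replacing the points in each small cube with their centroid. This is a minimal illustration that assumes co-registration has already been done; it isn't how any particular software performs fusion, and the 1 m voxel size in the example is arbitrary.

```python
import math
from collections import defaultdict


def merge_clouds(clouds, voxel_size):
    """Merge co-registered point clouds, keeping one centroid per occupied voxel.

    clouds: iterable of lists of (x, y, z) tuples, all in the same coordinate system.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    voxels = defaultdict(list)
    for cloud in clouds:
        for x, y, z in cloud:
            key = (math.floor(x / voxel_size),
                   math.floor(y / voxel_size),
                   math.floor(z / voxel_size))
            voxels[key].append((x, y, z))
    merged = []
    for pts in voxels.values():
        n = len(pts)
        merged.append(tuple(sum(coord) / n for coord in zip(*pts)))
    return merged


lidar_points = [(0.1, 0.1, 0.1), (5.0, 5.0, 5.0)]
photo_points = [(0.2, 0.2, 0.2)]
print(len(merge_clouds([lidar_points, photo_points], voxel_size=1.0)))  # prints 2
```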

What Software Is Best for Processing and Analyzing UAV-Generated Point Clouds?

You'll find several great options for processing UAV point clouds. Popular choices include Pix4D, Agisoft Metashape, and CloudCompare. They offer robust tools for analysis, visualization, and manipulation. Your specific project needs will determine the best fit for you.

In Summary

You've now explored the top 10 methods for creating point clouds in UAV mapping. Each technique offers unique advantages, from LiDAR's accuracy to photogrammetry's cost-effectiveness. As you choose the best approach for your project, consider factors like required precision, budget constraints, and environmental conditions. Remember, combining multiple methods can often yield the most thorough results. Keep experimenting and refining your skills to stay at the forefront of UAV mapping technology.

As educators and advocates for responsible drone use, we’re committed to sharing our knowledge and expertise with aspiring aerial photographers.
